Why we should (carefully) consider paying kids to learn

Research co-authored by UNSW Business School's Richard Holden shows incentivised education increased kids' proficiency at maths and reading.

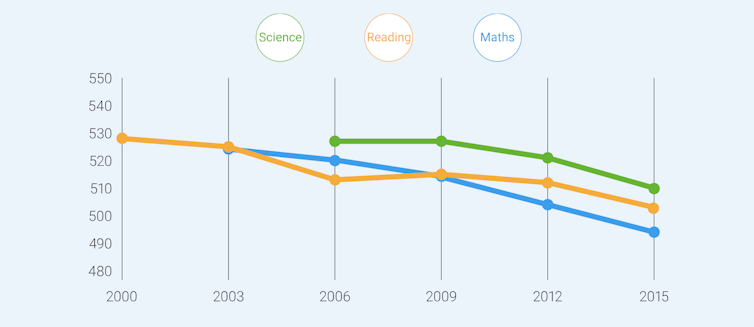

Over the past 15 years, we’ve seen a decline in the performance of Australian school students on international tests. On the Programme for International Student Assessment (PISA), Australia ranks a disappointing 20th in mathematics and 12th in reading. However you feel about standardised tests like NAPLAN and PISA, it certainly isn’t good news that we’re falling behind internationally.

Australian PISA scores. Source: ACER

Over the same period, there has also been a revolution in education research through the use of randomised controlled trials to assess the effectiveness of different education policies. All manner of things have been tried – everything from smaller class sizes to intensive tutoring. And now paying kids to learn.

My co-authors and I did just that in two sets of experiments, in Houston, Texas, and Washington, D.C. We found that when kids were paid for things such as attendance, good behaviour, short-cycle tests, and homework, they were 1% more likely to go to school, committed 28% fewer behavioural infractions, and were 13.5% more likely to finish their homework.

This led to a big increase in the number of kids performing at a proficient level in mathematics and reading. It cost money: we distributed roughly AU$7 million in incentives to 6,875 kids. But measured financially, the approach of paying students for several things at once (such as behaviour, attendance and academic tasks) produced a 32% annual return on investment.
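For a rough sense of scale, dividing the total by the number of children gives an average payout per child. This is only a back-of-the-envelope figure: it assumes the AU$7 million covers both the Houston and Washington, D.C. programs and treats it as spread evenly across all children, even though Houston parents and teachers were also eligible for payments.

$$
\frac{\text{AU\$7,000,000}}{6{,}875\ \text{children}} \approx \text{AU\$1,018 per child}
$$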

In Houston, we paid 1,734 fifth graders to do maths homework problems. We paid the parents too, if their child did their homework.

Some 50 schools were given educational software that fitted in with the curriculum. Half (25) of those schools were randomly selected to be in the "treatment group". Students in these schools received AU$2.80 for each homework problem they mastered, their parents received another AU$2.80 per problem mastered, and teachers were eligible for bonuses of up to AU$14,000.

The 25 control schools got the identical educational software and training, but no financial incentives.

This randomised controlled trial allows for a simple test of the effect of financial incentives. It works because there are large numbers of students in both the treatment and control groups, and because assignment to the groups was random. Differences in other factors, such as innate ability, home background or parental involvement, average out across the two groups.

So to understand the true, causal effect of the cash incentives on test scores we can just look at the difference in average test scores between the treatment and control kids.

This is the same principle underlying pharmaceutical trials. For example, some patients might get heart medication, while others get a placebo (a sugar pill). Researchers then look at the difference in heart functioning to figure out whether the medication works.

This approach is the gold standard for understanding the true effect of an intervention – in medicine, economics, or education.
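To make the difference-in-means logic concrete, here is a minimal Python sketch on simulated data. Everything in it is a hypothetical assumption for illustration: the function name `simulate_trial`, the score scale, a "true" incentive effect of 5 points, and the sizes of the hidden school and student differences. None of it is data from the study. It randomly assigns half of 50 hypothetical schools to treatment, loosely mirroring the Houston design, and contrasts that with a non-random scenario in which the better-off schools opt in.

```python
import random
import statistics


def simulate_trial(randomise=True, n_schools=50, students_per_school=35,
                   true_effect=5.0, seed=0):
    """Hypothetical simulation: return mean(treated) - mean(control) scores.

    All parameters are illustrative assumptions, not figures from the study.
    """
    rng = random.Random(seed)
    # Unobserved school-level advantage (resources, neighbourhood, ...).
    school_effects = [rng.gauss(0, 3) for _ in range(n_schools)]
    if randomise:
        # Random assignment: half the schools are chosen by lottery.
        treated = set(rng.sample(range(n_schools), n_schools // 2))
    else:
        # Self-selection (for contrast): the better-off half of schools opt in.
        ranked = sorted(range(n_schools), key=lambda s: school_effects[s])
        treated = set(ranked[n_schools // 2:])
    treat_scores, control_scores = [], []
    for school, effect in enumerate(school_effects):
        for _ in range(students_per_school):
            # Unobserved student factors such as ability or home background.
            score = rng.gauss(50, 10) + effect
            if school in treated:
                treat_scores.append(score + true_effect)
            else:
                control_scores.append(score)
    # The causal estimate is simply the difference in group averages.
    return statistics.mean(treat_scores) - statistics.mean(control_scores)


if __name__ == "__main__":
    print("randomised estimate:", round(simulate_trial(), 2))
    print("self-selected estimate:", round(simulate_trial(randomise=False), 2))
```

With random assignment the hidden school and student factors balance out, so the estimate lands close to the assumed effect of 5; with self-selection the treated schools were already ahead, so the estimate overstates the effect. Changing `seed` shows how the randomised estimate scatters around the true value.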

The financial incentives we used in Houston led to children doing lots more homework, and to a fairly large increase in performance on standardised maths tests. But there was an almost equal offsetting decline in performance on reading tests.

The children responded to the incentives all right – by shifting their efforts from reading, which they didn’t receive incentives for, to maths.

The most able 20% of students, based on their prior-year test scores, did way better in maths and no worse in reading. Incentives for the least able 20% of students were a disaster. They did lots more maths problems, did no better on maths tests, and far worse on reading tests.
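A subgroup comparison like the one above can be sketched the same way: split students into fifths by prior-year score, then take the treatment-minus-control difference within each fifth. This is a minimal illustration, not the study's actual analysis code. The function name `effect_by_quintile`, the `(prior_score, treated, outcome)` record layout and the toy data are all hypothetical, and `statistics.quantiles` requires Python 3.8 or later.

```python
import bisect
import statistics


def effect_by_quintile(students):
    """Treated-minus-control mean outcome within each prior-score quintile.

    `students` is a list of (prior_score, treated, outcome) tuples; this
    record layout is a hypothetical choice for illustration.
    """
    # Four cut points that split the pooled prior scores into fifths.
    cuts = statistics.quantiles([s[0] for s in students], n=5)
    groups = {q: {True: [], False: []} for q in range(5)}
    for prior, treated, outcome in students:
        q = bisect.bisect_right(cuts, prior)  # 0 = lowest 20%, 4 = highest 20%
        groups[q][treated].append(outcome)
    # Only report quintiles that contain both treated and control students.
    return {
        q: statistics.mean(g[True]) - statistics.mean(g[False])
        for q, g in groups.items()
        if g[True] and g[False]
    }


if __name__ == "__main__":
    # Toy data: prior scores 0..99, every second student treated.
    # Built in by assumption: +8 for treated top-fifth students,
    # -6 for treated bottom-fifth students, no effect otherwise.
    toy = [
        (p, p % 2 == 0,
         50 + (8 if p % 2 == 0 and p >= 80 else 0)
         - (6 if p % 2 == 0 and p < 20 else 0))
        for p in range(100)
    ]
    print(effect_by_quintile(toy))
```

Because the cut points come from the pooled sample, treated and control students in the same fifth started from similar prior scores, so each within-fifth difference is a like-for-like comparison.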

By contrast, in Washington, D.C., we provided incentives for sixth-, seventh- and eighth-grade students on multiple measures: attendance, behaviour, short-cycle assessments, and two further measures chosen by each school. This led to a 17% increase in students scoring at or above proficiency for their grade in maths and a 15% increase in reading proficiency.

Paying children to study and behave might sound radical, or even unethical. Yet we provide incentives to kids all the time. Most parents use a combination of carrots and sticks as motivation already, such as screen time or treats.

A legitimate concern is that cash incentives might affect intrinsic motivation and turn learning into a transaction rather than a joy. The evidence from our study showed intrinsic motivation actually increased.

Perhaps the harder question is whether it’s ethical to use an approach that won’t help less advantaged students perform better and develop a love of learning.

Nearly two decades of research in the US using randomised controlled trials has identified the positive causal effect of a range of interventions. These include high-dose tutoring, out-of-school and community-based reading programs, smaller class sizes, better teachers, a culture of high expectations and, yes, financial incentives.

In Australia, we should be open-minded and look at the evidence. This will involve carefully designed randomised trials in Australian schools to determine what really works, and what the return on investment is.

Richard Holden, Professor of Economics, UNSW

This article is republished from The Conversation under a Creative Commons license. Read the original article.