Over the past few decades, research into animal sounds has surged. Advances in recording equipment and analysis techniques have driven new insights into animal behaviour, population distribution, taxonomy and anatomy.

In a new study published in Ecology and Evolution, we show the limitations of one of the most common methods used to analyse animal sounds. These limitations may have caused disagreements about a whale song in the Indian Ocean, and about animal calls on land, too.

We demonstrate a new method that can overcome this problem. It reveals previously hidden details of animal calls, providing a basis for future advances in animal sound research.

The importance of whale song

More than a quarter of whale species are listed as vulnerable, endangered or critically endangered. Understanding whale behaviour, population distribution and the impact of human-made noise is key to successful conservation efforts.

For creatures that spend nearly all their time hidden in the vast open ocean, these are difficult things to study, but analysis of whale songs can give us vital clues.

However, we can’t just analyse whale songs by listening to them – we need ways to measure them in more detail than the human ear can provide.

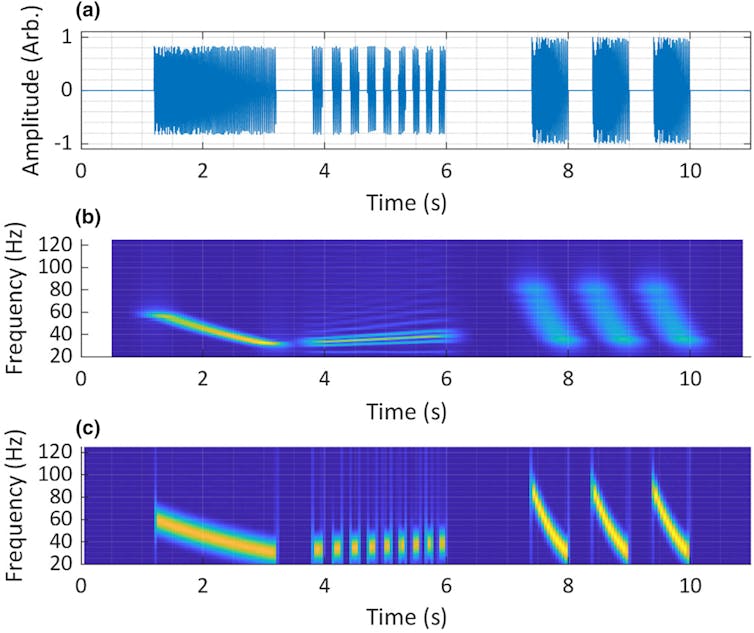

For this reason, often a first step in studying an animal sound is to generate a visualisation called a spectrogram. It can give us a better idea of a sound’s character. Specifically, it shows when the energy in the sound occurs (temporal details), and at what frequency (spectral details).

We can learn about the sound’s structure in terms of time, frequency and intensity by carefully inspecting these spectrograms and measuring them with other algorithms, allowing for a deeper analysis. They are also key tools in communicating findings when we publish our work.

Why spectrograms have limitations

The most common method for generating spectrograms is known as the short-time Fourier transform, or STFT. It’s used in many fields, including mechanical engineering, biomedical engineering and experimental physics.

However, it’s acknowledged to have a fundamental limitation – it can’t accurately visualise all the sound’s temporal and spectral details at the same time. This means every STFT spectrogram sacrifices either some temporal or spectral information.

This problem is more pronounced at lower frequencies. So it’s especially problematic when analysing sounds made by animals like the pygmy blue whale, whose song is so low, it approaches the lower limit of human hearing.
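To make the trade-off concrete, here is a minimal sketch in Python. It is not code from our study: the sample rate and window lengths are made-up values chosen for illustration, and the spacing between frequency bins is used as a rough stand-in for spectral detail (the true resolution also depends on the window shape).

```python
def stft_resolution(sample_rate_hz, window_samples):
    """Return (window duration in seconds, frequency bin spacing in Hz).

    A longer window gives finer frequency bins but blurs events in time;
    a shorter window does the opposite.
    """
    time_resolution_s = window_samples / sample_rate_hz
    freq_resolution_hz = sample_rate_hz / window_samples
    return time_resolution_s, freq_resolution_hz


# Hypothetical recording rate, chosen only for illustration.
sample_rate_hz = 250

for window_samples in (32, 256, 2048):
    t_res, f_res = stft_resolution(sample_rate_hz, window_samples)
    # The product of the two numbers is always 1: improving one worsens the other.
    print(f"{window_samples:5d} samples: {t_res:.3f} s per window, "
          f"{f_res:.3f} Hz per bin")
```

One way to see why low sounds suffer most: a bin spacing of 1 Hz is 5% of a 20 Hz call, but only 0.05% of a 2,000 Hz bird song. Getting bins fine enough for a very low call requires a long window, and a long window smears any rapid changes within it.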

Before my PhD, I worked in acoustics and audio signal processing, where I became all too familiar with the STFT spectrogram and its shortcomings.

But there are different methods for generating spectrograms. It occurred to me the STFTs used in whale song studies might be hiding some details, and there could be other methods more suited to the task.

In our study, my co-author Tracey Rogers and I compared the STFT to newer visualisation methods. We used made-up (synthetic) test signals, as well as recordings of pygmy blue whales, Asian elephants and other animals, such as cassowaries and American crocodiles.

The methods we tested included a new algorithm called the Superlet transform, which we adapted from its original use in brain wave analysis. We found this method produced visualisations of our synthetic test signal with up to 28% fewer errors than the others we tested.

A better way to visualise animal sounds

This result was promising, but the Superlet revealed its full potential when we applied it to animal sounds.

Recently, there’s been some disagreement around the Chagos pygmy blue whale song: whether its first sound is “pulsed” or “tonal”. Both terms describe a sound that contains extra frequencies, but those frequencies are produced in two distinct ways.

STFT spectrograms can’t resolve this debate, because they can show this sound as either pulsed or tonal, depending on how they’re configured. Our Superlet visualisation shows the sound as pulsed and agrees with most studies that describe this song.
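The effect of configuration can be reproduced with a toy example. The sketch below is an illustration only, not our method or data: it builds a hypothetical 30 Hz tone whose loudness rises and falls five times a second, then analyses it with a long and a short STFT window. All of the names and numbers are assumptions chosen to keep the example small.

```python
import cmath
import math

SAMPLE_RATE_HZ = 200  # hypothetical sample rate
CARRIER_HZ = 30       # hypothetical low-frequency tone
PULSE_RATE_HZ = 5     # hypothetical number of pulses per second


def synthetic_call(duration_s=3.0):
    """A tone whose loudness rises and falls PULSE_RATE_HZ times per second."""
    samples = []
    for i in range(int(duration_s * SAMPLE_RATE_HZ)):
        t = i / SAMPLE_RATE_HZ
        envelope = 0.5 * (1 + math.cos(2 * math.pi * PULSE_RATE_HZ * t))
        samples.append(envelope * math.sin(2 * math.pi * CARRIER_HZ * t))
    return samples


def stft_frames(signal, window_samples):
    """Split the signal into Hann-windowed frames with 50% overlap."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * i / window_samples)
              for i in range(window_samples)]
    hop = window_samples // 2
    return [[window[i] * signal[start + i] for i in range(window_samples)]
            for start in range(0, len(signal) - window_samples + 1, hop)]


def magnitude_spectrum(frame):
    """Plain discrete Fourier transform magnitudes (slow, but dependency-free)."""
    n = len(frame)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(frame)))
            for k in range(n // 2 + 1)]


def energy_swing(frames):
    """Ratio of the loudest frame's energy to the quietest frame's energy."""
    energies = [sum(x * x for x in frame) for frame in frames]
    return max(energies) / min(energies)


signal = synthetic_call()
long_frames = stft_frames(signal, 200)   # 1 s window, 1 Hz bin spacing
short_frames = stft_frames(signal, 20)   # 0.1 s window, 10 Hz bin spacing

# Long window: energy is steady from frame to frame, and the pulsing appears
# instead as separate frequency lines at 25, 30 and 35 Hz.
long_spectrum = magnitude_spectrum(long_frames[0])
print("long window energy swing:", round(energy_swing(long_frames), 3))
print("long window 25/27/30 Hz:", [round(long_spectrum[k], 3) for k in (25, 27, 30)])

# Short window: bins are 10 Hz apart, so the 25 and 35 Hz lines cannot be
# separated from 30 Hz, but energy now rises and falls with each pulse.
print("short window energy swing:", round(energy_swing(short_frames), 1))
```

The same sound looks like a steady stack of frequencies in one configuration and a train of bursts in the other. Neither picture is wrong, but neither shows both aspects at once.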

When visualising Asian elephant rumbles, the Superlet showed pulsing that was mentioned in the original description of this sound, but has been absent from all later descriptions. It’s also never been shown in a spectrogram.

Our Superlet visualisations of the southern cassowary call and the American crocodile roar both showed previously unreported temporal details that were not shown by the spectrograms in previous studies.

These are only preliminary findings, each based on a single recording. To confirm these observations, more sounds will need to be analysed. Even so, this is fertile ground for future work.

Ease of use may be Superlet’s greatest strength, even beyond improved accuracy. Many researchers using sound to study animals have backgrounds in ecology, biology and veterinary science. They learn audio signal analysis only as a means to an end.

To improve accessibility of the Superlet transform to these researchers, we implemented it in a free, easy to use, open-source software app. We look forward to seeing what new discoveries they might make using this exciting new method.

Benjamin A. Jancovich, PhD Candidate in Behavioural Ecology and Bioacoustics, Casual Academic, UNSW Sydney

This article is republished from The Conversation under a Creative Commons license. Read the original article.